Prevent Tragedies: How to Avoid Catastrophe, Risk Management Modules, the Proactive Safety Method, Risks and Emergencies

How to Avoid Catastrophe

by Catherine H. Tinsley, Robin L. Dillon, and Peter M. Madsen

From the Magazine (April 2011)

Summary.

Most business failures—such as engineering disasters, product malfunctions, and PR crises—are foreshadowed by near misses, close calls that, had luck not intervened, would have had far worse consequences. The space shuttle Columbia’s fatal reentry, BP’s Gulf oil rig disaster, Toyota’s stuck accelerators, and even the iPhone 4’s antenna failures—all were preceded by near-miss events that should have tipped off managers to impending crises. The problem is that near misses are often overlooked—or, perversely, viewed as a sign that systems are resilient and working well. That’s because managers are blinded by cognitive biases, argue Georgetown professors Tinsley and Dillon, and Brigham Young University’s Madsen.

Seven strategies can help managers recognize and learn from near misses. Managers should be on increased alert when time or cost pressures are high; watch for deviations in operations from the norm and uncover their root causes; make decision-makers accountable for near misses; envision worst-case scenarios; be on the lookout for near misses masquerading as successes; and reward individuals for exposing near misses.

--------

Consider the BP Gulf oil rig disaster. As a case study in the anatomy of near misses and the consequences of misreading them, it’s close to perfect. In April 2010, a gas blowout occurred during the cementing of the Deepwater Horizon well. The blowout ignited, killing 11 people, sinking the rig, and triggering a massive underwater spill that would take months to contain. Numerous poor decisions and dangerous conditions contributed to the disaster: Drillers had used too few centralizers to position the pipe, the lubricating “drilling mud” was removed too early, and managers had misinterpreted vital test results that would have confirmed that hydrocarbons were seeping from the well. In addition, BP relied on an older version of a complex fail-safe device called a blowout preventer that had a notoriously spotty track record.

Why did Transocean (the rig’s owner), BP executives, rig managers, and the drilling crew overlook the warning signs, even though the well had been plagued by technical problems all along (crew members called it “the well from hell”)? We believe that the stakeholders were lulled into complacency by a catalog of previous near misses in the industry—successful outcomes in which luck played a key role in averting disaster. Increasing numbers of ultradeep wells were being drilled, but significant oil spills or fatalities were extremely rare. And many Gulf of Mexico wells had suffered minor blowouts during cementing (dozens of them in the past two decades); however, in each case chance factors—favorable wind direction, no one welding near the leak at the time, for instance—helped prevent an explosion. Each near miss, rather than raise alarms and prompt investigations, was taken as an indication that existing methods and safety procedures worked.

For the past seven years, we have studied near misses in dozens of companies across industries from telecommunications to automobiles, at NASA, and in lab simulations. Our research reveals a pattern: Multiple near misses preceded (and foreshadowed) every disaster and business crisis we studied, and most of the misses were ignored or misread. Our work also shows that cognitive biases conspire to blind managers to near misses. Two in particular cloud our judgment. The first is “normalization of deviance,” the tendency over time to accept anomalies—particularly risky ones—as normal. Think of the growing comfort a worker might feel with using a ladder with a broken rung; the more times he climbs the dangerous ladder without incident, the safer he feels it is. For an organization, such normalization can be catastrophic. Columbia University sociologist Diane Vaughan coined the phrase in her book The Challenger Launch Decision to describe the organizational behaviors that allowed a glaring mechanical anomaly on the space shuttle to gradually be viewed as a normal flight risk—dooming its crew. The second cognitive error is the so-called outcome bias. When people observe successful outcomes, they tend to focus on the results more than on the (often unseen) complex processes that led to them.

Recognizing and learning from near misses isn’t simply a matter of paying attention; it actually runs contrary to human nature. In this article, we examine near misses and reveal how companies can detect and learn from them. By seeing them for what they are—instructive failures—managers can apply their lessons to improve operations and, potentially, ward off catastrophe.

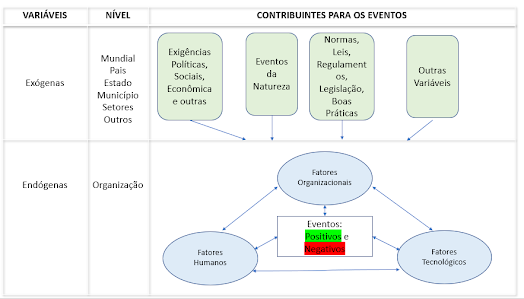

Organizational disasters, studies show, rarely have a single cause. Rather, they are initiated by the unexpected interaction of multiple small, often seemingly unimportant, human errors, technological failures, or bad business decisions. These latent errors combine with enabling conditions to produce a significant failure. A latent error on an oil rig might be a cementing procedure that allows gas to escape; enabling conditions might be a windless day and a welder working near the leak. Together, the latent error and enabling conditions ignite a deadly firestorm. Near misses arise from the same preconditions, but in the absence of enabling conditions, they produce only small failures and thus go undetected or are ignored.

Latent errors often exist for long periods before they combine with enabling conditions to produce a significant failure. Whether an enabling condition transforms a near miss into a crisis generally depends on chance; thus, it makes little sense to try to predict or control enabling conditions. Instead, companies should focus on identifying and fixing latent errors before circumstances allow them to create a crisis.
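The latent-error/enabling-condition model described above can be made concrete with a small sketch. It is an illustration only, not the authors' method: the function names are hypothetical, and the arithmetic assumes that a latent error is present in every operating period and that the enabling condition appears independently with a fixed probability p. Under that assumption, the number of near misses before the first failure follows a geometric distribution with mean (1 - p)/p, so a rare enabling condition yields a long run of apparent successes.

```python
from collections import Counter
from typing import Iterable, Tuple


def classify(latent_error: bool, enabling_condition: bool) -> str:
    """Classify one operating period under the latent-error/enabling-condition model."""
    if latent_error and enabling_condition:
        return "failure"    # latent error combined with an enabling condition
    if latent_error:
        return "near miss"  # same precondition, but chance withheld the trigger
    return "normal"         # no latent error, so nothing for a trigger to combine with


def tally(periods: Iterable[Tuple[bool, bool]]) -> Counter:
    """Count outcomes over hypothetical (latent_error, enabling_condition) pairs."""
    return Counter(classify(le, ec) for le, ec in periods)


def expected_near_misses_before_failure(p_enabling: float) -> float:
    """Mean number of near misses before the first failure.

    ASSUMPTION: a latent error is present in every period and the enabling
    condition occurs independently with probability p_enabling, so the count
    is geometric with mean (1 - p) / p.
    """
    if not 0 < p_enabling <= 1:
        raise ValueError("p_enabling must be in (0, 1]")
    return (1 - p_enabling) / p_enabling


if __name__ == "__main__":
    # Hypothetical history: five lucky periods, then the enabling condition appears.
    history = [(True, False)] * 5 + [(True, True)]
    print(tally(history))
    print(expected_near_misses_before_failure(0.25))
```

For example, with an enabling condition present in one period out of four (p = 0.25), the sketch expects three near misses, on average, before the first failure. The rarer the enabling condition, the longer the string of lucky outcomes that can be mistaken for evidence that the system is safe, which is why the authors recommend fixing the latent error rather than trying to predict the trigger.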

Recognizing and Preventing Near Misses

Our research suggests seven strategies that can help organizations recognize near misses and root out the latent errors behind them. We have developed many of these strategies in collaboration with NASA—an organization that was initially slow to recognize the relevance of near misses but is now developing enterprisewide programs to identify, learn from, and prevent them.

1. Heed High Pressure.

The greater the pressure to meet performance goals such as tight schedules, cost, or production targets, the more likely managers are to discount near-miss signals or misread them as signs of sound decision-making. BP’s managers knew the company was incurring overrun costs of $1 million a day in rig lease and contractor fees, which surely contributed to their failure to recognize warning signs. The high-pressure effect also contributed to the Columbia space shuttle disaster, in which insulation foam falling from the external fuel tank damaged the shuttle’s wing during liftoff, causing the shuttle to break apart as it reentered the atmosphere. Managers had been aware of the foam issue since the start of the shuttle program and were concerned about it early on, but as dozens of flights proceeded without serious mishaps, they began to classify foam strikes as maintenance issues—rather than as near misses. This classic case of normalization of deviance was exacerbated by the enormous political pressure the agency was under at the time to complete the International Space Station’s main core. Delays on the shuttle, managers knew, would slow down the space station project.

Despite renewed concern about foam strikes caused by a particularly dramatic recent near miss, and with an investigation underway, the Columbia took off. According to the Columbia Accident Investigation Board, “The pressure of maintaining the flight schedule created a management atmosphere that increasingly accepted less-than-specification performance of various components and systems.”

When people make decisions under pressure, psychological research shows, they tend to rely on heuristics or rules of thumb, and thus are more easily influenced by biases. In high-pressure work environments, managers should expect people to be more easily swayed by outcome bias, more likely to normalize deviance, and more apt to believe that their decisions are sound. Organizations should encourage, or even require, employees to examine their decisions during pressure-filled periods and ask, “If I had more time and resources, would I make the same decision?”

2. Learn from Deviations.

As the Toyota and JetBlue crises suggest, managers’ response when some aspect of operations skews from the norm is often to recalibrate what they consider acceptable risk. Our research shows that in such cases, decision-makers may clearly understand the statistical risk represented by the deviation, but grow increasingly less concerned about it. We’ve seen this effect clearly in a laboratory setting. Turning again to the space program for insight, we asked study participants to assume operational control of a Mars rover in a simulated mission. Each morning they received a weather report and had to decide whether or not to drive onward. On the second day, they learned that there was a 95% chance of a severe sandstorm, which had a 40% chance of causing catastrophic wheel failure. Half the participants were told that the rover had successfully driven through sandstorms in the past (that is, it had emerged unscathed in several prior near misses); the other half had no information about the rover’s luck in past storms. When the time came to choose whether or not to risk the drive, three-quarters of the near-miss group opted to continue driving; only 13% of the other group did. Both groups knew, and indeed stated that they knew, that the risk of failure was 40%—but the near-miss group was much more comfortable with that level of risk.

Managers should seek out operational deviations from the norm and examine whether their reasons for tolerating the associated risk have merit. Questions to ask might be: Have we always been comfortable with this level of risk? Has our policy toward this risk changed over time?

3. Uncover Root Causes.

When managers identify deviations, their reflex is often to correct the symptom rather than its cause. Such was Apple’s response when it at first suggested that customers address the antenna problem by changing the way they held the iPhone. NASA learned this lesson the hard way as well, during its 1998 Mars Climate Orbiter mission. As the spacecraft headed toward Mars, it drifted slightly off course four times; each time, managers made small trajectory adjustments, but they didn’t investigate the cause of the drifting. As the $200 million spacecraft approached Mars, instead of entering into orbit, it disintegrated in the atmosphere. Only then did NASA uncover the latent error: programmers had used English rather than metric units in their software coding. The course corrections addressed the symptom of the problem but not the underlying cause, and their apparent success lulled decision-makers into thinking that the issue had been adequately resolved.

The health care industry has made great strides in learning from near misses and offers a model for others. Providers are increasingly encouraged to report mistakes and near misses so that the lessons can be teased out and applied. An article in Today’s Hospitalist, for example, describes a near miss at Delnor-Community Hospital, in Geneva, Illinois. Two patients sharing a hospital room had similar last names and were prescribed drugs with similar-sounding names—Cytotec and Cytoxan. Confused by the similarities, a nurse nearly administered one of the drugs to the wrong patient. Luckily, she caught her mistake in time and filed a report detailing the close call. The hospital immediately separated the patients and created a policy to prevent patients with similar names from sharing rooms in the future.

4. Demand Accountability.

Even when people are aware of near misses, they tend to downgrade their importance. One way to limit this potentially dangerous effect is to require managers to justify their assessments of near misses. In one of our studies, participants evaluated Chris, a fictional manager who neglected some due diligence in his supervision of a space mission. They gave him equally good marks whether the mission had succeeded or had narrowly escaped failure; Chris’s raters didn’t seem to see that the near miss was in fact a near disaster. In a continuation of that study, we told a separate group of managers and contractors that they would have to justify their assessment of Chris to upper management. Knowing they’d have to explain their rating to the bosses, those evaluating the near-miss scenario judged Chris’s performance just as harshly as did those who had learned the mission had failed—recognizing, it seems, that rather than managing well, he’d simply dodged a bullet.

5. Consider Worst-Case Scenarios.

Unless expressly advised to do so, people tend not to think through the possible negative consequences of near misses. Apple managers, for example, were aware of the iPhone’s antenna problems but probably hadn’t imagined how bad a consumer backlash could get. If they had considered a worst-case scenario, they might have headed off the crisis, our research suggests. In one study, we told participants to suppose that an impending hurricane had a 30% chance of hitting their house and asked them if they would evacuate. Just as in our Mars rover study, people who were told that they’d escaped disaster in previous near misses were more likely to take a chance (in this case, opting to stay home). However, when we told participants to suppose that, although their house had survived previous hurricanes, a neighbor’s house had been hit by a tree during one, they saw things differently; this group was far more likely to evacuate. Examining events closely helps people distinguish between near misses and successes, and they’ll often adjust their decision-making accordingly.

Managers in Walmart’s business-continuity office clearly understand this. For several years before Hurricane Katrina, the office had carefully evaluated the previous hurricane near misses of its stores and infrastructure and, based on them, planned for a direct hit to a metro area where it had a large presence. In the days before Katrina made landfall in Louisiana, the company expanded the staff of its emergency command center from the usual six to 10 people to more than 50, and stockpiled food, water, and emergency supplies in its local warehouses. Having learned from prior near misses, Walmart famously outperformed local and federal officials in responding to the disaster. Said Jefferson Parish Sheriff Harry Lee, “If the American government had responded like Walmart has responded, we wouldn’t be in this crisis.”

6. Evaluate Projects at Every Stage.

When things go badly, managers commonly conduct postmortems to determine causes and prevent recurrences. When they go well, however, few do formal reviews of the success to capture its lessons. Because near misses can look like successes, they often escape scrutiny.

The chief knowledge officer at NASA’s Goddard Space Flight Center, Edward Rogers, instituted a “pause and learn” process in which teams discuss at each project milestone what they have learned. They not only cover mishaps but also expressly examine perceived successes and the design decisions considered along the way. By critically examining projects while they’re underway, teams avoid outcome bias and are more likely to see near misses for what they are. These sessions are followed by knowledge-sharing workshops involving a broader group of teams. Other NASA centers, including the Jet Propulsion Laboratory, which manages NASA’s Mars program, are beginning similar experiments. According to Rogers, most projects that have used the pause-and-learn process have uncovered near misses—typically, design flaws that had gone undetected. “Almost every mishap at NASA can be traced to some series of small signals that went unnoticed at the critical moment,” he says.

7. Reward Owning Up.

Seeing and attending to near misses requires organizational alertness, but no amount of attention will avert failure if people aren’t motivated to expose near misses—or, worse, are discouraged from doing so. In many organizations, employees have reason to keep quiet about failures, and in that type of environment, they’re likely to keep suspicions about near misses to themselves.

Political scientists Martin Landau and Donald Chisholm described one such case that, though it took place on the deck of a warship, is relevant to any organization. An enlisted seaman on an aircraft carrier discovered during a combat exercise that he’d lost a tool on the deck. He knew that an errant tool could cause a catastrophe if it were sucked into a jet engine, and he was also aware that admitting the mistake could bring a halt to the exercise—and potential punishment. As long as the tool was unaccounted for, each successful takeoff and landing would be a near miss, a lucky outcome. He reported the mistake, the exercise was stopped, and all aircraft aloft were redirected to bases on land, at a significant cost.

Rather than being punished for his error, the seaman was commended by his commanding officer in a formal ceremony for his bravery in reporting it. Leaders in any organization should publicly reward staff for uncovering near misses—including their own.

Two forces conspire to make learning from near misses difficult: Cognitive biases make them hard to see, and, even when they are visible, leaders tend not to grasp their significance. Thus, organizations often fail to expose and correct latent errors even when the cost of doing so is small—and so they miss opportunities for organizational improvement before disaster strikes. This tendency is itself a type of organizational failure—a failure to learn from “cheap” data. Surfacing near misses and correcting root causes is one of the soundest investments an organization can make.
