Prevent Tragedies - Highlights of James Reason, Human Error, and Risk Management Modules, and the Proactive Safety Method, Risks and Emergencies

Highlights of James Reason, Human Error:

Chernobyl:

The fundamental attribution error has been widely studied in social psychology. This refers to a pervasive tendency to blame bad outcomes on an actor’s personal inadequacies (i.e., dispositional factors) rather than attribute them to situational factors beyond his or her control. Such tendencies were evident in both the Russian and the British responses to the Chernobyl accident. Thus, the Russian report on Chernobyl (USSR State Committee on the Utilization of Atomic Energy, 1986) concluded that: “The prime cause of the accident was an extremely improbable combination of violations of instructions and operating rules.” Lord Marshall, Chairman of the U.K. Central Electricity Generating Board (CEGB), wrote a foreword to the U.K. Atomic Energy Authority’s report on the Chernobyl accident (UKAEA, 1987), in which he assigned blame in very definite terms: “To us in the West, the sequence of reactor operator errors is incomprehensible. Perhaps it came from supreme arrogance or complete ignorance. More plausibly, we can speculate that the operators as a matter of habit had broken rules many, many times and got away with it so the safety rules no longer seemed relevant.” On whether it could happen in the U.K., he wrote: “My own judgment is that the overriding importance of ensuring safety is so deeply ingrained in the culture of the nuclear industry that this will not happen in the U.K.”

TMI:

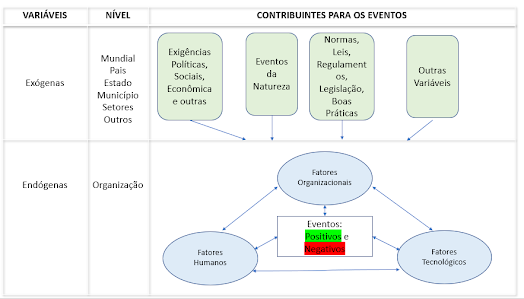

The TMI accident was more than an unexpected progression of faults; it was more than a situation planned for but handled inadequately; it was more than a situation whose plan had proved inadequate. The TMI accident constituted a fundamental surprise in that it revealed a basic incompatibility between the nuclear industry’s view of itself and reality. Prior to TMI the industry could and did think of nuclear power as a purely technical system where all the problems were in the form of some technical area or areas and the solutions to these problems lay in those engineering disciplines. TMI graphically revealed the inadequacy of that view because the failures were in the socio-technical system and not due to pure technical nor pure human factors.

-----

The Rogovin inquiry (Rogovin, 1979), for example, discovered that the TMI accident had ‘almost happened’ twice before, once in Switzerland in 1974 and once at the Davis-Besse plant in Ohio in 1977. Similarly, an Indian journalist wrote a prescient series of articles about the Bhopal plant and its potential dangers three years before the tragedy (see Marcus & Fox, 1988).

Other unheeded warnings were also available prior to the Challenger, Zeebrugge and King’s Cross accidents.

Before judging too harshly the human failings that concatenate to cause a disaster, we need to make a clear distinction between the way the precursors appear now, given knowledge of the unhappy outcome, and the way they seemed at the time.

But before we rush to judgment, there are some important points to be kept in mind. First, most of the people involved in serious accidents are neither stupid nor reckless, though they may well have been blind to the consequences of their actions. Second, we must beware of falling prey to the fundamental attribution error (i.e., blaming people and ignoring situational factors). As Perrow (1984) argued, it is in the nature of complex, tightly coupled systems to suffer unforeseeable sociotechnical breakdowns. Third, before beholding the mote in his brother’s eye, the retrospective observer should be aware of the beam of hindsight bias in his own.
